Understanding OpenAI's Temperature Parameter

The OpenAI temperature parameter can be provided to any of the GPT family of models.

It's very useful, but it's not all that self-explanatory.

Temperature is a number between 0 and 2, with a default value of 1 or 0.7 depending on the model you choose.
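
Since the accepted range is 0 to 2, it can help to check a value before sending a request. The following is only a minimal sketch: `check_temperature` is a hypothetical helper name of my own, not part of the OpenAI library.

```python
def check_temperature(value):
    """Return value as a float if it lies in the 0 to 2 range, else raise."""
    temperature = float(value)
    # Chained comparison: also rejects NaN, which fails every comparison.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError(f"temperature must be between 0 and 2, got {temperature}")
    return temperature


print(check_temperature(0.7))
```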

The temperature is used to control the randomness of the output.

When you set it higher, you'll get more random outputs. When you set it lower, towards 0, the outputs become more deterministic.

Sentence Completion Example

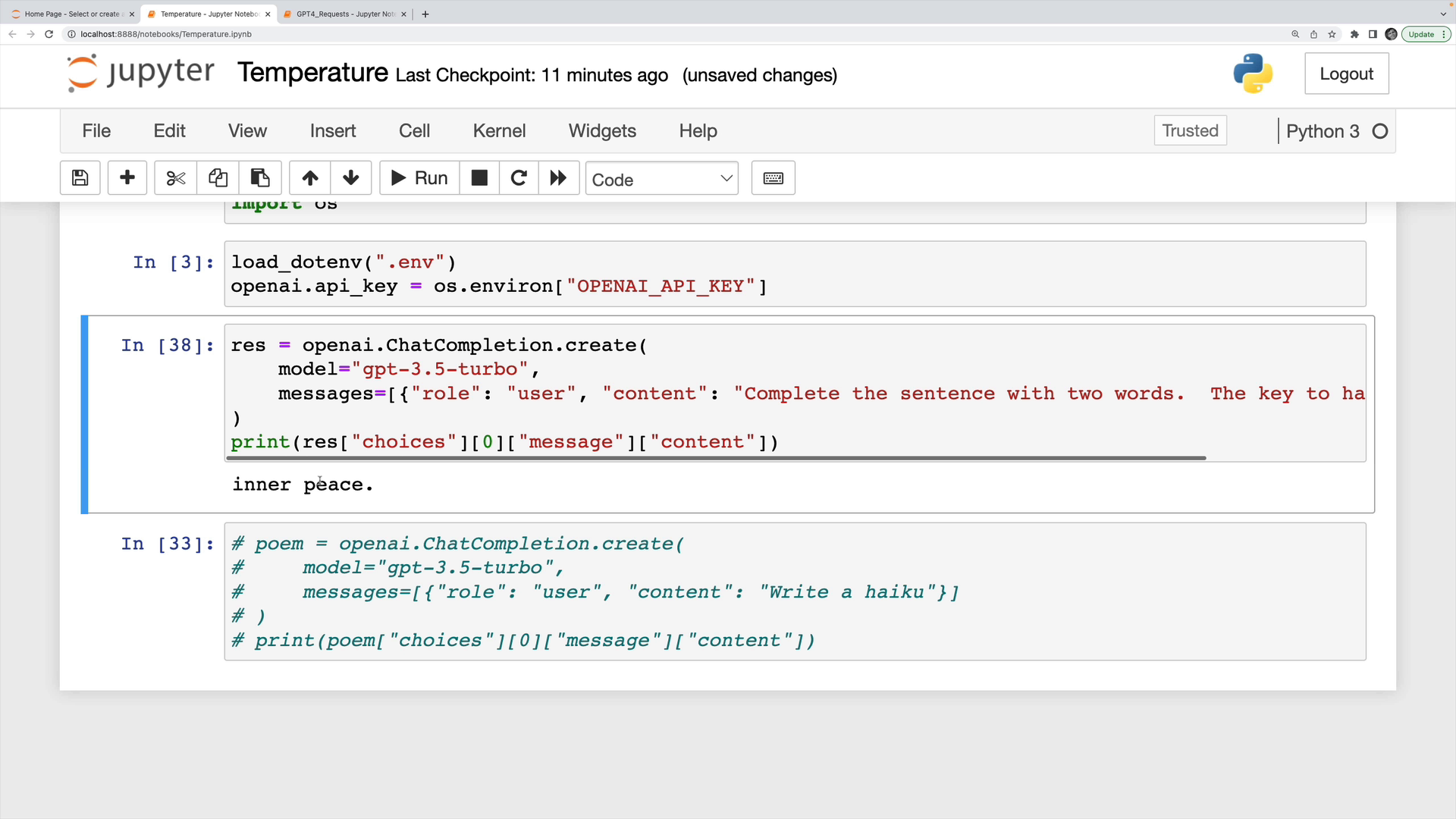

For this example, we're working in a Python notebook, but this would easily work with JavaScript as well.

We have an environment variable for the OpenAI API key, and we're making a very simple completion using gpt-3.5-turbo.

The message we send asks the model to complete the sentence "the key to happiness is" with two words.

    res = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": "Complete the sentence with two words. The key to happiness is"}],
    )

With the default temperature, running this will usually return "contentment and gratitude" or "inner peace".
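
To pull the reply text out of a response like `res`, something along these lines should work with the pre-1.0 `openai` Python package that `openai.ChatCompletion.create` belongs to. The helper name `extract_text` is my own, and `sample_res` is a hand-written stand-in that only mimics the shape of a chat completion response.

```python
def extract_text(res):
    """Return the text of the first choice in a chat completion response."""
    # A chat completion response holds a list of choices; each choice
    # carries a message with a role and the generated content.
    return res["choices"][0]["message"]["content"].strip()


# Hand-written stand-in with the same shape as a real response,
# so the helper can be tried without calling the API.
sample_res = {
    "choices": [
        {"message": {"role": "assistant", "content": " inner peace "}}
    ]
}

print(extract_text(sample_res))
```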

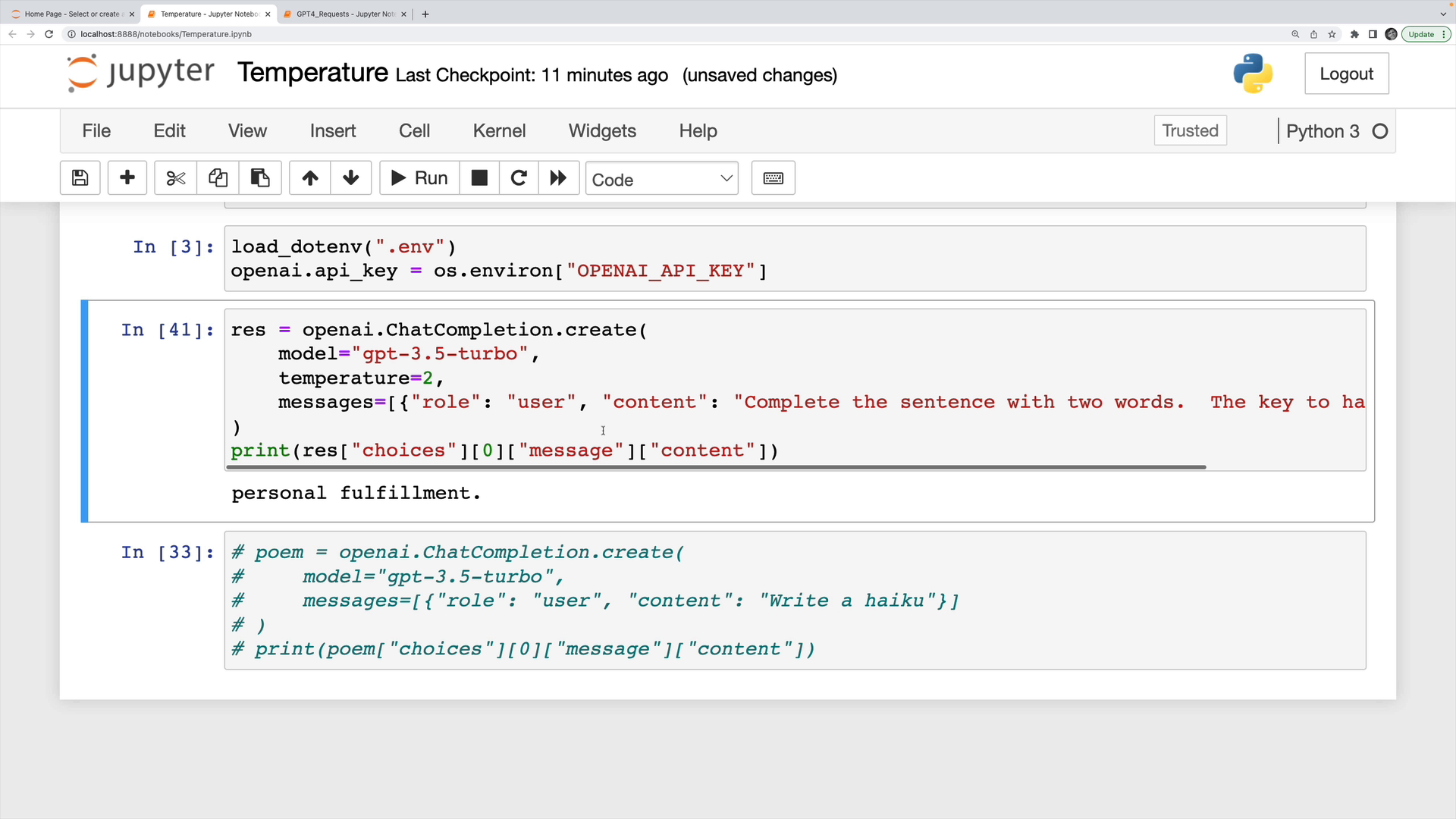

Increasing and Decreasing the Temperature

If we increase the temperature parameter to the maximum of 2 by adding `temperature=2`, we get much more varied output: "personal fulfillment", "simplicity and gratitude", "contentment and balance", "satisfaction and appreciation", "different for everybody", "gratitude and teamwork", "mindfulness and empathy".

Generally I wouldn't recommend setting the temperature this high, but it works for this example.

Moving the temperature all the way down to 0, the model returns "contentment and gratitude" pretty much every single time. The output isn't guaranteed to be identical on every run, but it most likely will be.
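
One way to see this for yourself is to send the same prompt several times at each temperature and count the distinct answers. The sketch below is an assumption-laden outline, not a definitive script: `ask_openai` and `tally_outputs` are hypothetical helper names, `ask_openai` targets the pre-1.0 `openai` package used in this post, and every run is a separate API call.

```python
from collections import Counter

PROMPT = "Complete the sentence with two words. The key to happiness is"


def ask_openai(prompt, temperature):
    """Send one prompt through the legacy (pre-1.0) openai package."""
    import openai  # imported lazily so the rest of the sketch runs without it

    res = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        temperature=temperature,
        messages=[{"role": "user", "content": prompt}],
    )
    return res["choices"][0]["message"]["content"].strip()


def tally_outputs(complete, prompt, temperature, runs=10):
    """Call complete(prompt, temperature) `runs` times and count each answer."""
    return Counter(complete(prompt, temperature) for _ in range(runs))


# Usage, assuming an API key is configured in the environment:
# for t in (0, 1, 2):
#     print(t, tally_outputs(ask_openai, PROMPT, t))
```

Passing the completion function in as an argument keeps the counting logic separate from the API call, so it can be tried with a stand-in function first.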

Haiku Example

Increasing the temperature will produce more interesting and creative output.

However, going too high has its own issues.

In this example, we ask the model to write a haiku.

    res = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        temperature=0.9,
        messages=[{"role": "user", "content": "Write a haiku"}],
    )

With the temperature at 0.9, it's going to produce nice haikus that are typically nature-themed:

flowers in the field,
dancing in the summer breeze,
nature's symphony.

Solitary bee
over forgotten blossoms,
April cold leaves.

Moving the temperature all the way up to 2, we'll certainly get something different, but it won't always be coherent:

Easy tropical flips-trade palms winds filter music_,without flotates spring waves

First of all, this is not a haiku. It also introduces some very weird things, like underscores and a made-up word.

The output can get even stranger, to the point where it doesn't make any sense at all.

This is why I generally recommend sticking with a temperature between 0 and 1.

How Temperature Works

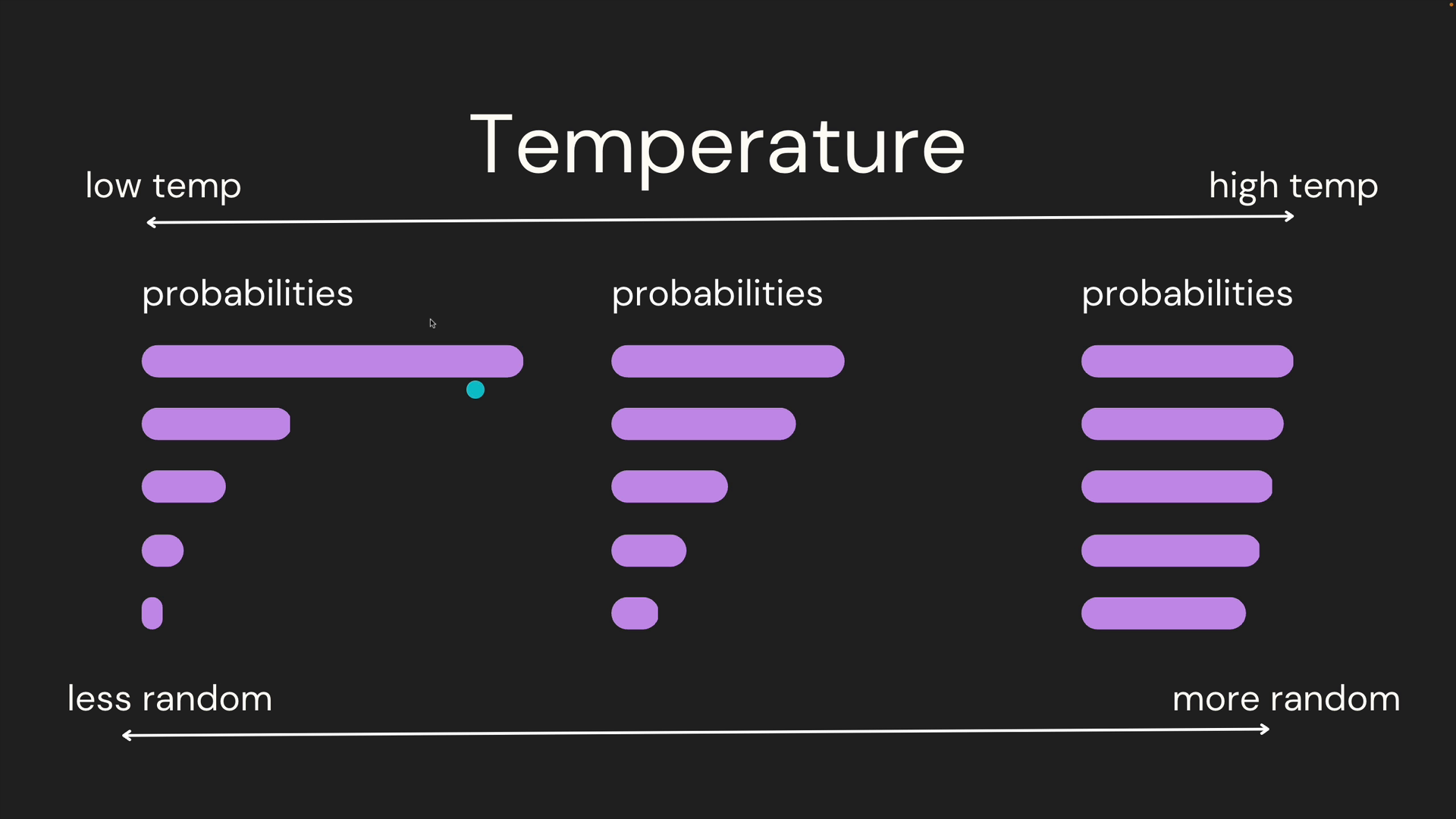

Without going into too much detail, there's some math behind how temperature works.

Basically, the temperature value we provide is used to scale the scores (logits) the model assigns to each candidate next token, before those scores are turned into the probabilities the model samples from.

With a higher temperature, we'll have a flatter, softer curve of probabilities, so less likely tokens get picked more often. With a lower temperature, we have a much more peaked distribution. If the temperature is almost 0, the distribution is very sharply peaked and the most likely token is chosen nearly every time.
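
To make the flatter-versus-peaked idea concrete, here is a small sketch of the standard "softmax with temperature" calculation: divide each score by the temperature, then normalize into probabilities. This is the textbook formulation, assumed here for illustration rather than confirmed as OpenAI's exact implementation, and both the function name and the three scores are made up.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    if temperature <= 0:
        # Division by zero is undefined, so this sketch rejects 0 outright.
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    # Subtract the largest value before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top token's share of the probability shrinking as the temperature rises, which is why higher settings produce more varied picks.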

Here's a visualization:

Recommended Temperatures

I recommend starting somewhere around 1 for the temperature.

If you need something to be more deterministic, you can decrease it. If you want some spicier results, you can increase it above 1.

Just remember, if you go too high, you will get some really weird output!